Mohamed Abdelfattah

I am a computer vision Ph.D. student at the EPFL VITA lab, fortunately advised by Alexandre Alahi.

My research focuses on Representation Learning in Multimodal LLMs and Self-Supervised Transformer Foundation Models to address a central challenge in video understanding: learning temporally grounded, cross-modal representations without relying on costly supervision or brittle alignment objectives. I design predictive pretraining strategies that enable models to reason over video, audio, motion, and language in a unified and transferable way.

I was an AI Research Scientist Intern at Meta Reality Labs (Summer 2025), where I worked with Edoardo Remelli and Shugao Ma on building Multimodal LLMs for smart wearable systems. Previously, I was a deep learning visiting research student at Boston University, collaborating with Sarah Adel Bargal, and a computer vision research intern at the KAUST Vision-Cair group, working with Mohamed Elhoseiny.

I received my B.Sc. in Computer Engineering from The American University in Cairo in 2022 (highest honors, Dean's list).

Email / CV / LinkedIn / Google Scholar / GitHub

I am actively looking for internship positions in computer vision, LLMs, and deep learning. Feel free to reach out to me!

Mohamed Abdelfattah*,

NeurIPS 2025

@inproceedings{abdelfattahoskar,

title={OSKAR: Omnimodal Self-supervised Knowledge Abstraction and Representation},

author={Abdelfattah, Mohamed O and Messaoud, Kaouther and Alahi, Alexandre},

booktitle={The Thirty-ninth Annual Conference on Neural Information Processing Systems}

}

In one sentence: OSKAR is a self-supervised multimodal foundation model that learns in the latent space by predicting masked multimodal features.

Mohamed Abdelfattah,

ECCV 2024

@inproceedings{abdelfattah2024sjepa,

author={Abdelfattah, Mohamed and Alahi, Alexandre},

booktitle={European Conference on Computer Vision (ECCV)},

title={S-JEPA: Joint Embedding Predictive Architecture for Self-Supervised Skeletal Action Recognition},

year={2024},

organization={Springer},

}

In one sentence: S-JEPA is an instantiation of the Joint Embedding Predictive Architecture (JEPA) for self-supervised skeletal action recognition.

Mohamed Abdelfattah,

CVPR 2024

@inproceedings{abdelfattah2024maskclr,

title={MaskCLR: Attention-Guided Contrastive Learning for Robust Action Representation Learning},

author={Abdelfattah, Mohamed and Hassan, Mariam and Alahi, Alexandre},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={18678--18687},

year={2024}

}

In one sentence: MaskCLR improves the robustness of transformer-based action recognition methods against noisy and incomplete skeletons.

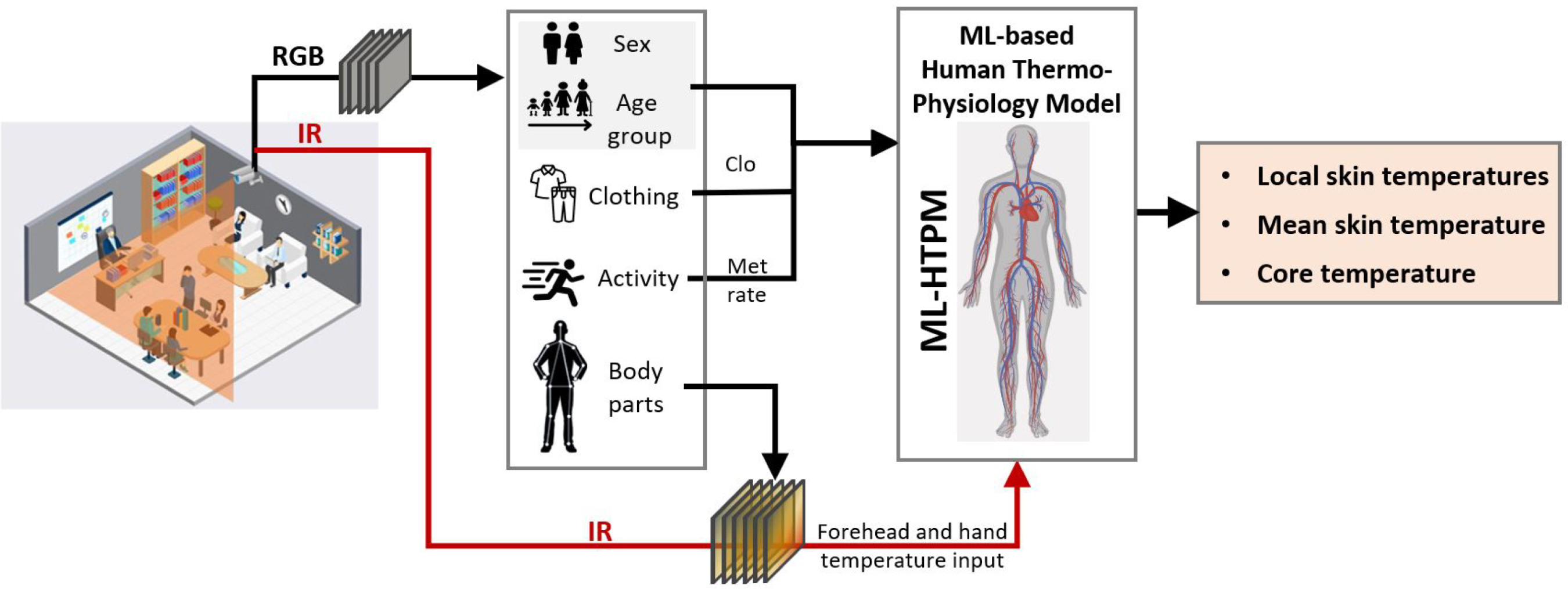

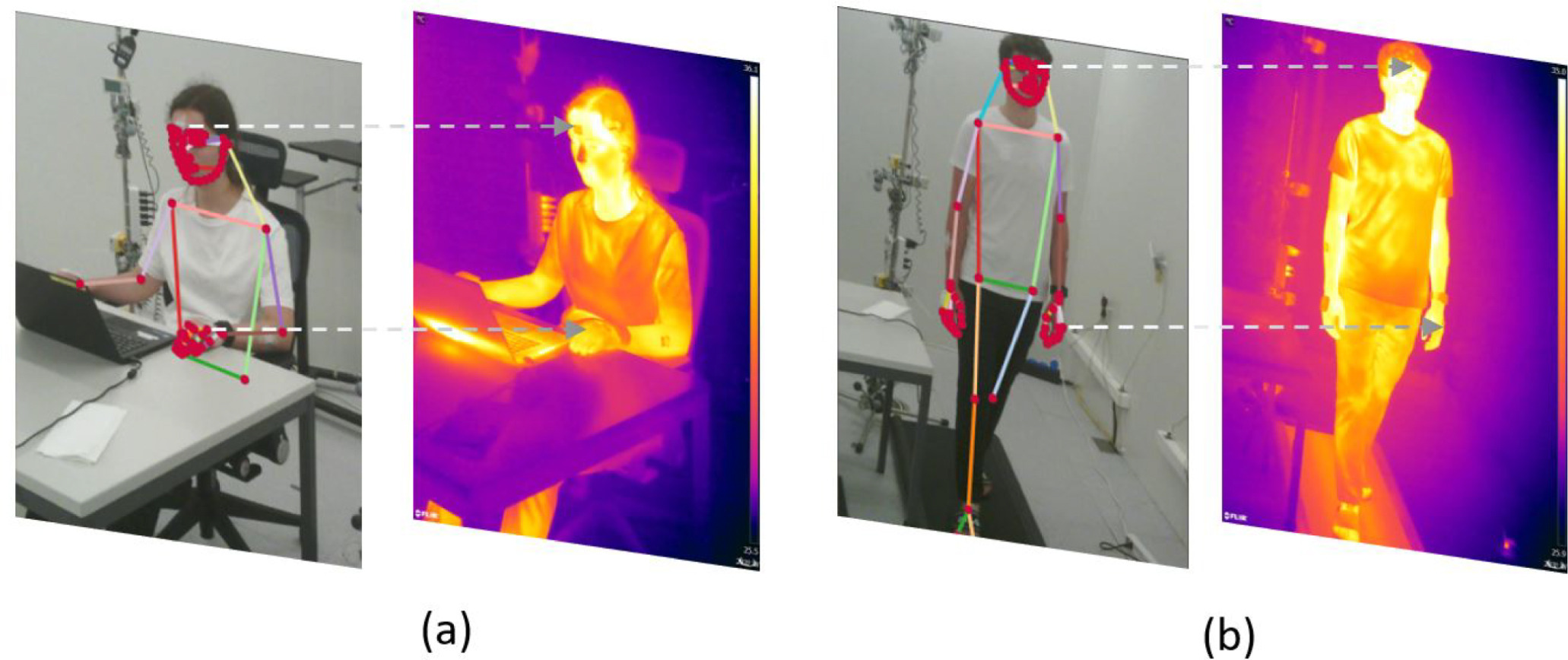

Building and Environment, 2023

@article{rida2023toward,

title={Toward contactless human thermal monitoring: A framework for Machine Learning-based human thermo-physiology modeling augmented with computer vision},

author={Rida, Mohamad and Abdelfattah, Mohamed and Alahi, Alexandre and Khovalyg, Dolaana},

journal={Building and Environment},

volume={245},

pages={110850},

year={2023},

publisher={Elsevier}

}

In one sentence: This work presents a machine-learning human thermo-physiology model (ML-HTPM) developed to predict thermal response.

18th Healthy Buildings Europe Conference, 2023

In one sentence: In this study, we applied multimodal, non-intrusive computer vision algorithms to extract personal features such as clothing ensemble, activity level, posture, sex, age, and skin temperature, which serve as the parameters that define a person's thermal comfort.

EMNLP 2022

@InProceedings{mohamed2022artelingo,

title={ArtELingo: A Million Emotion Annotations of WikiArt with Emphasis on Diversity over Language and Culture},

author={Mohamed, Youssef and Abdelfattah, Mohamed and Alhuwaider, Shyma and Li, Feifan and Zhang, Xiangliang and Church, Kenneth Ward and Elhoseiny, Mohamed},

booktitle={Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing (EMNLP)},

year={2022}

}

In one sentence: This paper introduces ArtELingo, a new benchmark and dataset designed to encourage work on diversity across languages and cultures.

CVPR 2022

@inproceedings{bashkirova2022zerowaste,

title={Zerowaste dataset: Towards deformable object segmentation in cluttered scenes},

author={Bashkirova, Dina and Abdelfattah, Mohamed and Zhu, Ziliang and Akl, James and Alladkani, Fadi and Hu, Ping and Ablavsky, Vitaly and Calli, Berk and Bargal, Sarah Adel and Saenko, Kate},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={21147--21157},

year={2022}

}

In one sentence: We take a step towards computer-aided waste detection and present ZeroWaste, the first in-the-wild industrial-grade waste detection and segmentation dataset.

- [2023 & 2024] Head Teaching Assistant (TA) of Deep Learning for Autonomous Vehicles at EPFL.

- [2022] Deep Learning Teaching Assistant at AUC.

- [2021 & 2022] Computer Architecture Teaching Assistant at AUC.

- [2022] Awarded the AUC PA Cup for the class of 2022 for top academic and extracurricular achievements.

- [2022] High Academic Achievement (Top 10% of Graduating Class) in SSE Honours Assembly | AUC.

- [2021] Undergraduate Research Award, 4,000 USD | AUC.

- [2019] Ranked among the top 10 ROV teams in the Middle East | MATE ROV Regional Competition.

- [2018] Ranked 1st in the CSCE programming contest | AUC.

- [2018] Named Highest Achiever and Reader of the Year | AUC.

Template adapted from Jon Barron's and Siwei Zhang's websites.